One of the most common problems I run into while building Spotfire projects is requests that are too large. They begin simply, with a request for data or a modification to an existing project. However, they quickly balloon until we’ve created a monstrously huge project. One of these monsters jumped on my desk last August, and I’ve been working on it since then with a few stops and starts. Last night, I wrapped up a week of work in Midland. I was about to shut down my machine when I realized I wanted to write up what I’ve learned about how to increase the speed of delivery in these monster project situations.

Now, I work primarily with Spotfire and Alteryx, but the tips I want to share are system agnostic and can be applied to increase the speed of delivery in many different types of projects. Before diving in, I want to provide context for my project.

Project Background

The original request came from our completions group to modify an existing Spotfire project created by another user. They wanted to update the existing visualizations to be more user-friendly, add in a few additional data sets, and create visualizations for key metrics used in monthly reporting. Now, I didn’t expect this to be simple. The data sets were coming from WellView, which is a highly normalized database. I knew I would need to string together many tables and deal with data of varying levels of granularity, but it was nothing I haven’t done before. On the positive side, I was happy to have a project to start from and not have to build from scratch.

We ran into several complications over the following months including:

- A WellView upgrade that modified tables, columns, and general architecture.

- Additional scope meant duplicating another project within this project (with modifications of course).

- Shifting priorities that took my efforts elsewhere for about 6 weeks.

With that said, here are my top tips for increasing the speed of delivery in large projects.

Tip No 1

Never Underestimate the Impact of Load Time

The project I inherited as a starting point was beefy. I don’t recall the exact load time, but it was one of those where you hit open (or reload) and then found another task to work on for 20 minutes (best guess). I wasn’t thrilled with this, but I left it as is because I didn’t want to risk modifying someone else’s content, and I saw rebuilding existing content as wasted time. After all, the users hadn’t asked me to make it faster.

That was a mistake the first time I made it. Then, the scope of the project increased to incorporate content from another project. Adding in those tables slowed it down even more, but again, I didn’t want to rebuild or modify content another user created.

Day after day, load time ate away at my productivity, my energy, and my creativity. I had to suffer the load time not only when opening the project but also anytime I wanted to reload data, which was fairly often since it was a work in progress. Eventually, I realized that not rearchitecting the project was going to lead to utter failure, so I bit the bullet and rebuilt it entirely. More about that in tip no 2.

Thus, tip no 1 is never underestimate the impact of long load times. Long load times are not just a default to be tolerated. They will significantly slow down delivery. Shorten them as much as possible to increase speed of delivery. I wrote an entire series on Data Shop Talk about load times in Spotfire. Here’s a link to the first post. I have more thoughts on this subject since writing the series and will eventually expand on it.

Tip No 2

Always Review & Optimize Architecture

The project in its original form didn’t have a lot of duplication or overlap in data. However, it did have some inconsistencies that I didn’t like but I accepted, at first. For example, our WellView data warehouse has a header table called wvwellheader with, you guessed it, well header data (ex. well name, field name, county). We also have a view with a similar name and columns. When the developer built this file, sometimes they grabbed header data from the table and sometimes from the view. At first, this was just an inconsistency that annoyed my OCD. Then, the WellView upgrade happened, and not all of the views were updated correctly. Inconsistency turned into bad data.

Next, I added content from another developer’s project. This introduced a TON of overlap and additional inconsistency in the data. Not only did it slow down the project, but it added confusion. I now had multiple start and end dates and the same data in different tables. Every time I had a question about a number, I had to trace it back to the source. I tried writing documentation to avoid that, but I still had to go through the same process over and over. This bogged down progress significantly, until…

Eventually, I rearchitected the project with an Alteryx workflow that feeds into Spotfire. In Alteryx, I made sure to rename every single column so that the source and content are clear and user-friendly. Since doing so, I haven’t had to trace my data back to the source. I know what the data is by the column name. Did it take a lot of time? Yeah, it did, and I had to redo every single visualization the other developer created because the tables in the project were different. But, by rearchitecting and rebuilding, I developed a much deeper understanding of the data.
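To make the renaming idea concrete, here is a minimal sketch in Python rather than Alteryx. It is not the workflow I built. The raw column names (wellname, fieldname, county), the friendly labels, and the sample row are all hypothetical; only the table name wvwellheader comes from the project.

```python
# A sketch of "rename every column so source and content are clear".
# Column names, labels, and the sample row are hypothetical.

def qualify_columns(table_name, friendly_names):
    """Map each raw column name to '<source table>: <friendly label>'."""
    return {
        raw: f"{table_name}: {label}"
        for raw, label in friendly_names.items()
    }


def rename_row(row, mapping):
    """Return a copy of the row with renamed keys; unmapped keys are kept."""
    return {mapping.get(key, key): value for key, value in row.items()}


header_map = qualify_columns(
    "wvwellheader",
    {"wellname": "Well Name", "fieldname": "Field Name", "county": "County"},
)

raw_row = {"wellname": "Example 1H", "fieldname": "Example Field", "county": "Midland"}
renamed = rename_row(raw_row, header_map)

for column, value in renamed.items():
    print(f"{column} = {value}")
```

With the source table baked into the column name, a number on a visualization can be traced to its origin just by reading the column label.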

Thus, tip no 2 is to review and optimize the project architecture. Architect a solution that is efficient and clear in both the table structure and individual columns. If you don’t, the bad architecture will eventually slow down delivery in one way or another.

Tip No 3

Start with Data Quality

Past experience has taught me to set project stage gates. We can’t move on to data set number 2 if we haven’t QAQCed and approved data set number 1. If you wait to do it all in the end, it will be done poorly or not at all. In my project, because the data quality was problematic, we sat at the same stage gate for a very long time. We would review data, find issues, correct them, review it again, and find new issues. Repeat…over and over. I needed to improve the data quality to increase speed of delivery.

You can assume data quality will be problematic if any of the following apply:

- Data is entered by hand.

- Controls don’t exist to maintain quality or consistency (ex. any date can be entered, even dates far, far into the future).

- The system uses text entry fields rather than pick lists. User A can enter “Halliburton” and user B can enter “haliburton llc.”

Only when I built out a Spotfire project solely for reviewing bad data every day were we able to move forward. Now, a tech gets an email from Spotfire Automation Services each morning directing them to bad data. It gets fixed, and metrics stay on track. The day after I created the QAQC project, I opened it up and could see everything had been fixed because we made it visible. I was overjoyed.
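The kinds of checks a daily QAQC review might run can be sketched in a few lines of Python. This is only an illustration, not the Spotfire project itself. The field names (well, vendor, job_date), the vendor pick list, and the sample records are all assumptions made up for the example.

```python
from datetime import date

# Hypothetical pick list of approved vendor spellings.
VENDOR_PICK_LIST = {"Halliburton"}


def find_issues(record, today):
    """Return a list of data quality problems found in one record."""
    issues = []
    # Control 1: dates should not be in the future.
    if record["job_date"] > today:
        issues.append("job_date is in the future")
    # Control 2: free-text vendor names must match the pick list exactly.
    if record["vendor"] not in VENDOR_PICK_LIST:
        issues.append(f"unrecognized vendor: {record['vendor']!r}")
    return issues


# Hypothetical sample records.
records = [
    {"well": "A", "vendor": "Halliburton", "job_date": date(2020, 1, 15)},
    {"well": "B", "vendor": "haliburton llc", "job_date": date(2020, 1, 16)},
    {"well": "C", "vendor": "Halliburton", "job_date": date(2099, 1, 1)},
]

today = date(2020, 2, 1)  # fixed so the example is repeatable
report = {r["well"]: find_issues(r, today) for r in records}

for well, issues in report.items():
    if issues:
        print(well, issues)
```

Only the records with problems are printed, which is the point: the tech sees a short list of things to fix rather than the whole data set.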

Thus, tip no 3 is to start with data quality. Reviewing bad data over and over again will slow down your delivery, so address it at the start.

Tip No 4

Shorten the Feedback Cycle

I used to work projects like this…

- Users tell me what they want.

- I build it bit by bit. When a bit is ready, I tell them it’s ready (usually by email).

- They review it at their convenience.

- They tell me what they want to be changed or fixed (usually by email).

- I fix it or change it.

- Repeat

Now, these are folks who are working active drilling and completions operations. I didn’t want to take up any more of their time than I had to, but let’s just say it: this approach results in slow delivery.

So, what’s a better method? The key is to speed up the feedback cycle. When I’m ready, I schedule an hour of time with the user(s) to get immediate feedback. There are different ways to do this depending on how familiar they are with the project and whether you are working with an individual or a team. You can simply observe them working through the project or you can guide them. You can work with users one at a time or do a joint feedback session. The important parts are these…

- Do it sooner rather than later.

- Do it often.

- Get their hands dirty. When their hands are on the mouse and keys, they are thinking about the data rather than what they are doing after you show them the project.

Thus, tip no 4 is to shorten the feedback cycle with interactive feedback sessions where the users have their hands on the project.

Tip No 5

Don’t Wait for Project Completion to Deliver

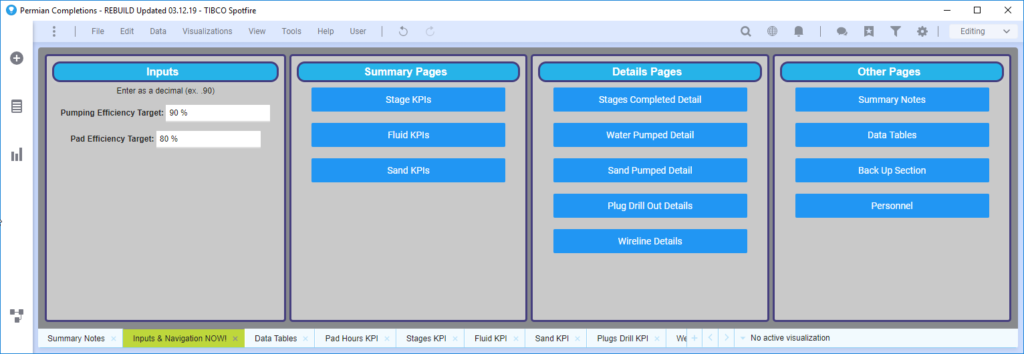

It might not feel like it, but it’s okay to deliver an incomplete project, as long as what is delivered is functional and includes quality data. For example, the project I am working on has 8 data tables and 35 pages. Of those 35 pages, the data in 12 of them is verified and the content is ready. I created a navigation page as shown above with buttons to hit the verified pages. Each page has a button that navigates back here.

If you really want to keep them out of your work in progress, change the navigation method to History Arrows, which only allow users to click buttons or go forward and backward in an analysis.

Now, this might be a little risky, but it delivers some content to users sooner rather than later and really helps increase speed of delivery. It’s important to get them into the project and using it. That usage generates critical feedback necessary to give them a quality product. Of course, I’ll keep a backup copy just in case.

Thus, tip no 5 is don’t wait for project completion to deliver. Deliver as the content is ready and let them use it.

Summary

In summary, use the following 5 tips to increase speed of delivery in analytics projects.

- Never underestimate the impact of load time

- Always review and optimize the architecture

- Start with data quality

- Shorten the feedback cycle

- Don’t wait until project completion to deliver

If you have any tips of your own, please feel free to leave a comment.

If you enjoyed this, check out the posts I wrote after attending the Gartner conference in 2019.
